Probability Theory Basics

Probability theory is a fundamental part of statistics and data science. It provides the mathematical framework for quantifying uncertainty and making predictions based on data. This article covers the core concepts of probability theory: what probability is, independent and dependent events, conditional probability, Bayes' Theorem, and the Law of Total Probability.

What is Probability?

Probability is a measure of the likelihood that a particular event will occur. It quantifies uncertainty and is expressed as a number between 0 and 1:

- 0 indicates an impossible event.

- 1 indicates a certain event.

The probability of an event is denoted by , and it is calculated as:

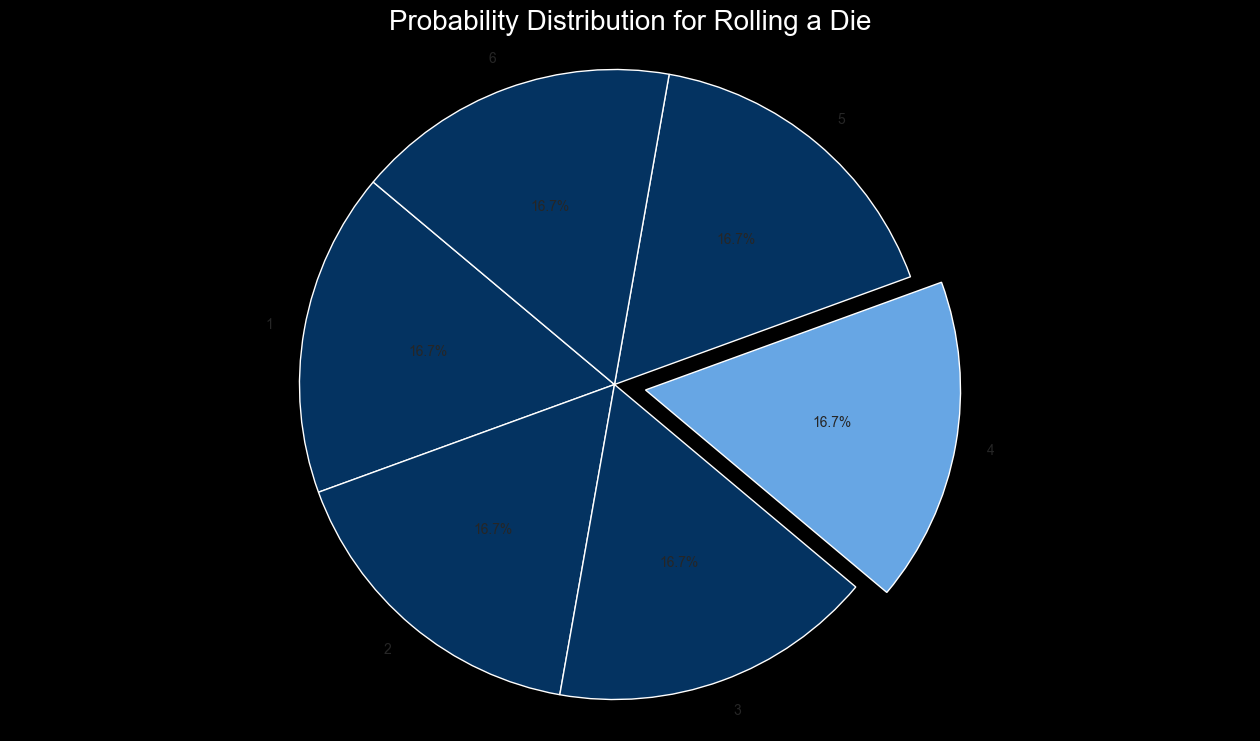

Example: Rolling a Die

Consider rolling a fair six-sided die. The probability of rolling a 4 (event $A$) is:

$$P(A) = \frac{1}{6} \approx 0.167$$

Since there is one favorable outcome (rolling a 4) out of six possible outcomes (1, 2, 3, 4, 5, 6), the probability of rolling a 4 is approximately 0.167.

Figure 1: Probability Distribution for Rolling a Die, highlighting the probability of rolling a 4.
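The counting rule above can be sketched in Python. This is a minimal illustration that assumes equally likely outcomes; `classical_probability` is a hypothetical helper name, and `fractions.Fraction` keeps the result exact instead of rounding it.

```python
from fractions import Fraction


def classical_probability(event, sample_space):
    """P(event) = favorable outcomes / total outcomes, assuming all outcomes are equally likely."""
    favorable = [outcome for outcome in sample_space if event(outcome)]
    return Fraction(len(favorable), len(sample_space))


# Sample space for a single roll of a fair six-sided die
die = [1, 2, 3, 4, 5, 6]

p_four = classical_probability(lambda face: face == 4, die)
print(p_four, round(float(p_four), 3))  # 1/6 0.167
```

The same helper works for any event on the die, for example `lambda face: face % 2 == 0` for rolling an even number, which gives 1/2.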

Independent and Dependent Events

Events can be categorized as independent or dependent based on whether the occurrence of one event affects the probability of another.

1. Independent Events

Independent events are events where the occurrence of one event does not affect the probability of the other. The probability of two independent events $A$ and $B$ occurring together is the product of their individual probabilities:

$$P(A \cap B) = P(A) \cdot P(B)$$

Example: Tossing Two Coins

When tossing two fair coins, the outcome of the first toss does not affect the outcome of the second toss. If $A$ is the event of getting heads on the first toss and $B$ is the event of getting heads on the second toss:

$$P(A) = \frac{1}{2}, \quad P(B) = \frac{1}{2}$$

The probability of getting heads on both tosses (event $A \cap B$) is:

$$P(A \cap B) = P(A) \cdot P(B) = \frac{1}{2} \cdot \frac{1}{2} = \frac{1}{4} = 0.25$$
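One way to check the independence rule is to enumerate the four equally likely outcomes of two fair tosses. The sketch below, built around a hypothetical `prob` helper, confirms that $P(A \cap B) = P(A) \cdot P(B)$ for this sample space.

```python
from fractions import Fraction
from itertools import product

# Sample space for two fair coin tosses: ('H', 'H'), ('H', 'T'), ('T', 'H'), ('T', 'T')
outcomes = list(product("HT", repeat=2))


def prob(event):
    """Fraction of the equally likely outcomes for which event(outcome) is True."""
    return Fraction(sum(1 for o in outcomes if event(o)), len(outcomes))


p_a = prob(lambda o: o[0] == "H")                   # heads on the first toss
p_b = prob(lambda o: o[1] == "H")                   # heads on the second toss
p_ab = prob(lambda o: o[0] == "H" and o[1] == "H")  # heads on both tosses

print(p_a, p_b, p_ab)     # 1/2 1/2 1/4
print(p_ab == p_a * p_b)  # True, consistent with A and B being independent
```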

2. Dependent Events

Dependent events are events where the occurrence of one event affects the probability of the other. The probability of two dependent events $A$ and $B$ occurring together is calculated using conditional probability:

$$P(A \cap B) = P(A) \cdot P(B \mid A)$$

Example: Drawing Cards Without Replacement

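Consider drawing two cards from a deck without replacement. If $A$ is the event of drawing an Ace on the first draw and $B$ is the event of drawing an Ace on the second draw, the events are dependent.

- Probability of drawing an Ace first: $P(A) = \frac{4}{52} = \frac{1}{13}$

- Probability of drawing an Ace on the second draw, given that an Ace was drawn first: $P(B \mid A) = \frac{3}{51} = \frac{1}{17}$

The probability of both events occurring is:

$$P(A \cap B) = P(A) \cdot P(B \mid A) = \frac{4}{52} \cdot \frac{3}{51} = \frac{1}{221} \approx 0.0045$$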
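The same result can be checked by brute force. This sketch assumes a standard 52-card deck represented as `(rank, suit)` tuples (an illustrative encoding). It multiplies the two probabilities from the formula above, then counts every ordered pair of cards drawn without replacement.

```python
from fractions import Fraction
from itertools import permutations

# A standard 52-card deck as (rank, suit) tuples
ranks = ["A"] + [str(n) for n in range(2, 11)] + ["J", "Q", "K"]
suits = ["clubs", "diamonds", "hearts", "spades"]
deck = [(rank, suit) for rank in ranks for suit in suits]

# P(A and B) = P(A) * P(B | A)
p_first_ace = Fraction(4, 52)           # 4 Aces among 52 cards
p_second_given_first = Fraction(3, 51)  # 3 Aces left among 51 cards
p_both = p_first_ace * p_second_given_first

# Brute force: every ordered pair of distinct cards is an equally likely draw
draws = list(permutations(deck, 2))
both_aces = sum(1 for first, second in draws if first[0] == "A" and second[0] == "A")
p_enumerated = Fraction(both_aces, len(draws))

print(p_both, p_enumerated, round(float(p_both), 4))  # 1/221 1/221 0.0045
```

`itertools.permutations` never pairs a card with itself, which is exactly what drawing without replacement means.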

Conditional Probability

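Conditional probability is the probability of an event occurring given that another event has already occurred. The probability of $A$ given $B$ is denoted by $P(A \mid B)$ and is calculated as:

$$P(A \mid B) = \frac{P(A \cap B)}{P(B)}, \quad P(B) > 0$$

Where:

- $P(A \cap B)$ is the probability of both events $A$ and $B$ occurring.

- $P(B)$ is the probability of event $B$ occurring.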

Example: Probability of Drawing a Red Card Given an Ace

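Consider a standard deck of 52 cards. Let $A$ be the event of drawing a red card, and $B$ be the event of drawing an Ace.

- $P(A \cap B) = \frac{2}{52}$ (since there are 2 red Aces in a deck).

- $P(B) = \frac{4}{52}$ (since there are 4 Aces in a deck).

The conditional probability of drawing a red card given that an Ace is drawn is:

$$P(A \mid B) = \frac{P(A \cap B)}{P(B)} = \frac{2/52}{4/52} = \frac{2}{4} = 0.5$$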

This result shows that if an Ace is drawn, there is a 50% chance it is a red Ace.
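The definition of conditional probability translates directly into code. In this sketch, `prob`, `conditional`, `is_red`, and `is_ace` are hypothetical names, and the deck is modeled as `(rank, suit)` tuples with hearts and diamonds as the red suits.

```python
from fractions import Fraction

# A standard 52-card deck as (rank, suit) tuples
ranks = ["A"] + [str(n) for n in range(2, 11)] + ["J", "Q", "K"]
suit_colors = {"hearts": "red", "diamonds": "red", "clubs": "black", "spades": "black"}
deck = [(rank, suit) for rank in ranks for suit in suit_colors]


def prob(event):
    """Probability of an event when every card is equally likely to be drawn."""
    return Fraction(sum(1 for card in deck if event(card)), len(deck))


def conditional(event_a, event_b):
    """P(A | B) = P(A and B) / P(B), defined only when P(B) > 0."""
    p_b = prob(event_b)
    if p_b == 0:
        raise ValueError("P(B) must be positive")
    return prob(lambda card: event_a(card) and event_b(card)) / p_b


def is_red(card):
    return suit_colors[card[1]] == "red"


def is_ace(card):
    return card[0] == "A"


print(prob(lambda c: is_red(c) and is_ace(c)))  # 1/26, i.e. 2/52
print(prob(is_ace))                             # 1/13, i.e. 4/52
print(conditional(is_red, is_ace))              # 1/2
```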

Bayes' Theorem

Bayes' Theorem is a powerful tool in probability theory that allows us to update our beliefs based on new evidence. It relates the conditional probabilities of events $A$ and $B$:

$$P(A \mid B) = \frac{P(B \mid A) \cdot P(A)}{P(B)}$$

Where:

- $P(A \mid B)$ is the posterior probability of $A$ given $B$.

- $P(B \mid A)$ is the likelihood of $B$ given $A$.

- $P(A)$ is the prior probability of $A$.

- $P(B)$ is the marginal probability of $B$.
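As a sanity check on the formula, Bayes' Theorem can reverse the red-card example from the previous section: starting from $P(\text{red} \mid \text{Ace}) = \frac{1}{2}$, it recovers $P(\text{Ace} \mid \text{red})$. The helper name `bayes_posterior` is a hypothetical choice for this sketch, and the result is cross-checked against the direct definition $P(A \cap B)/P(B)$.

```python
from fractions import Fraction


def bayes_posterior(likelihood, prior, marginal):
    """Return P(A | B) = P(B | A) * P(A) / P(B)."""
    if marginal == 0:
        raise ValueError("P(B) must be positive")
    return likelihood * prior / marginal


# A = "the card is an Ace", B = "the card is red", standard 52-card deck
p_red_given_ace = Fraction(2, 4)  # P(B | A): 2 of the 4 Aces are red
p_ace = Fraction(4, 52)           # P(A): prior
p_red = Fraction(26, 52)          # P(B): marginal, 26 red cards

posterior = bayes_posterior(p_red_given_ace, p_ace, p_red)

# Cross-check with the definition P(A | B) = P(A and B) / P(B), using the 2 red Aces
direct = Fraction(2, 52) / p_red

print(posterior, direct)  # 1/13 1/13
```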

Example: Medical Testing

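Suppose a diagnostic test for a disease has the following characteristics, where $D$ denotes having the disease, $\neg D$ not having it, and $+$ and $-$ denote a positive and a negative test result:

- Sensitivity (true positive rate) = $P(+ \mid D)$

- Specificity (true negative rate) = $P(- \mid \neg D)$

- Prevalence of the disease in the population = $P(D)$

If a person tests positive, what is the probability $P(D \mid +)$ that they actually have the disease?

Using Bayes' Theorem:

$$P(D \mid +) = \frac{P(+ \mid D) \cdot P(D)}{P(+)}$$

Where $P(+)$, the overall probability of a positive test, is calculated with the Law of Total Probability:

$$P(+) = P(+ \mid D) \cdot P(D) + P(+ \mid \neg D) \cdot P(\neg D) = \text{sensitivity} \cdot P(D) + (1 - \text{specificity}) \cdot (1 - P(D))$$

Substituting the test's sensitivity, specificity, and prevalence into these formulas gives the posterior probability:

$$P(D \mid +) \approx 0.167$$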

This result indicates that despite a positive test result, there is only a 16.7% chance that the person actually has the disease. When a disease is rare, false positives from the much larger healthy population can outnumber the true positives, which is why understanding and applying Bayes' Theorem matters in medical testing and other areas.
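The whole calculation fits in a few lines. The sketch below uses hypothetical inputs of 99% sensitivity, 95% specificity, and 1% prevalence, assumed purely for illustration; `positive_predictive_value` is an illustrative helper name borrowing the standard term for $P(D \mid +)$.

```python
from fractions import Fraction


def positive_predictive_value(sensitivity, specificity, prevalence):
    """P(D | +) via Bayes' Theorem, with P(+) from the law of total probability."""
    true_positive = sensitivity * prevalence               # P(+ | D) * P(D)
    false_positive = (1 - specificity) * (1 - prevalence)  # P(+ | not D) * P(not D)
    return true_positive / (true_positive + false_positive)


# Hypothetical inputs, chosen for illustration only
ppv = positive_predictive_value(
    sensitivity=Fraction(99, 100),
    specificity=Fraction(95, 100),
    prevalence=Fraction(1, 100),
)
print(ppv, round(float(ppv), 3))  # 1/6 0.167
```

With these assumed inputs, every 10,000 people tested yield 99 true positives against 495 false positives, so only 1 in 6 positive results comes from someone who has the disease.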

Law of Total Probability

The Law of Total Probability is used to calculate the probability of an event based on multiple, mutually exclusive scenarios that cover all possible outcomes. If events $B_1, B_2, \ldots, B_n$ are mutually exclusive and exhaustive, then for any event $A$:

$$P(A) = \sum_{i=1}^{n} P(A \mid B_i) \cdot P(B_i)$$

Example: Probability of Rain Based on Weather Forecasts

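Suppose the probability of rain, $R$, depends on which of three different weather forecasts, $F_1$, $F_2$, or $F_3$, applies, so each forecast comes with its own conditional probability of rain, $P(R \mid F_i)$.

The probability of each forecast being the accurate one is $P(F_i)$. For the Law of Total Probability to apply, these scenarios must be mutually exclusive and exhaustive, so $P(F_1) + P(F_2) + P(F_3) = 1$.

Using the Law of Total Probability, the overall probability of rain is:

$$P(R) = P(R \mid F_1) \cdot P(F_1) + P(R \mid F_2) \cdot P(F_2) + P(R \mid F_3) \cdot P(F_3) = 0.66$$

There is a 66% chance of rain once each forecast's probability of rain is weighted by the probability that the forecast is accurate.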
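The law reduces to a weighted sum, as in this sketch. The forecast numbers below are hypothetical and serve only to illustrate the computation; `total_probability` is an illustrative helper that also checks that the scenario probabilities sum to 1.

```python
import math
from fractions import Fraction


def total_probability(conditionals, weights):
    """P(A) = sum of P(A | B_i) * P(B_i) over mutually exclusive, exhaustive scenarios B_i."""
    if len(conditionals) != len(weights):
        raise ValueError("need one P(A | B_i) per scenario B_i")
    if not math.isclose(sum(weights), 1):
        raise ValueError("scenario probabilities P(B_i) must sum to 1")
    return sum(p_a_given_b * p_b for p_a_given_b, p_b in zip(conditionals, weights))


# Hypothetical forecast scenarios F_1, F_2, F_3 (illustrative numbers only)
p_rain_given_forecast = [Fraction(9, 10), Fraction(1, 2), Fraction(1, 10)]
p_forecast = [Fraction(6, 10), Fraction(3, 10), Fraction(1, 10)]

p_rain = total_probability(p_rain_given_forecast, p_forecast)
print(p_rain, float(p_rain))  # 7/10 0.7
```

Using `math.isclose` for the sum check lets the same function accept plain floats, whose sum may differ from 1 by a tiny rounding error.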

Conclusion

Understanding the basics of probability theory is crucial for data science, as it lays the groundwork for statistical inference, machine learning, and decision-making under uncertainty. By mastering concepts such as independent and dependent events, conditional probability, and Bayes' Theorem, you can make more informed decisions based on data.