Laplacian Matrices and Graph Partitioning

Laplacian matrices are a fundamental concept in graph theory with significant applications in unsupervised machine learning, particularly in spectral clustering and graph partitioning. This article explores the mathematical foundations of Laplacian matrices, their role in graph partitioning, and their applications in clustering algorithms.

1. Introduction to Graphs and Matrices

1.1 Graph Representation

A graph consists of a set of vertices (also called nodes) and a set of edges that connect pairs of vertices. Graphs are widely used in computer science and data science to model relationships and structures in data, such as social networks, molecular structures, and transportation systems.

- Adjacency Matrix: The adjacency matrix of a graph is a square matrix where each element represents the presence (or weight) of an edge between vertices and . For an unweighted graph, if there is an edge between and , and otherwise.

1.2 Degree Matrix

The degree matrix is a diagonal matrix where each diagonal element represents the degree of vertex , which is the number of edges connected to it:

The degree matrix plays a critical role in defining the Laplacian matrix of the graph.

2. Laplacian Matrices

2.1 Definition of the Laplacian Matrix

The Laplacian matrix of a graph is defined as:

Where:

- is the degree matrix.

- is the adjacency matrix.

The Laplacian matrix encapsulates the structure of the graph, and its properties are crucial for analyzing the graph’s connectivity and partitioning.

2.2 Properties of the Laplacian Matrix

- Symmetry: The Laplacian matrix is symmetric, i.e., .

- Positive Semi-Definiteness: The Laplacian matrix is positive semi-definite, meaning all its eigenvalues are non-negative.

- Eigenvalues and Eigenvectors: The smallest eigenvalue of the Laplacian matrix is always , corresponding to the eigenvector with constant components (often called the trivial eigenvector). The second smallest eigenvalue (the Fiedler value) and its corresponding eigenvector are particularly important for graph partitioning.

2.3 Normalized Laplacian Matrix

In some cases, it is useful to work with the normalized Laplacian matrix, especially when the graph has nodes with varying degrees. There are two common forms of the normalized Laplacian:

-

Symmetric Normalized Laplacian:

-

Random Walk Normalized Laplacian:

These normalized forms are particularly useful in spectral clustering, as they adjust for the varying degrees of nodes.

3. Graph Partitioning

3.1 The Graph Partitioning Problem

Graph partitioning involves dividing the vertices of a graph into disjoint subsets while minimizing the number of edges between different subsets. This is a critical problem in various applications, such as parallel computing, network analysis, and clustering.

3.2 Min-Cut Problem

One of the classic approaches to graph partitioning is the min-cut problem, which seeks to find a partition that minimizes the total edge weight between different subsets. However, the min-cut approach often results in unbalanced partitions, where one subset is significantly smaller than the other.

3.3 Normalized Cut

To address the limitations of the min-cut, the normalized cut (Ncut) criterion is used, which considers the sizes of the partitions. The normalized cut is defined as:

Where:

- and are the two partitions of the graph.

- is the sum of the edge weights between and .

- and are the total edge weights connected to the vertices in and , respectively.

The normalized cut criterion leads to more balanced partitions, making it particularly useful in applications like image segmentation and clustering.

4. Spectral Clustering and Laplacian Matrices

4.1 Role of Laplacian in Spectral Clustering

Spectral clustering leverages the eigenvalues and eigenvectors of the Laplacian matrix to perform clustering. The key idea is to use the eigenvectors corresponding to the smallest non-zero eigenvalues to embed the graph's nodes in a lower-dimensional space, where traditional clustering algorithms like k-means can be applied.

4.2 Steps in Spectral Clustering

- Compute the Laplacian Matrix: Calculate the Laplacian matrix (or normalized Laplacian) for the graph.

- Eigen Decomposition: Compute the eigenvectors corresponding to the smallest eigenvalues of the Laplacian matrix.

- Embedding: Use these eigenvectors to embed the nodes into a lower-dimensional space.

- Clustering: Apply a clustering algorithm, such as k-means, to the embedded points.

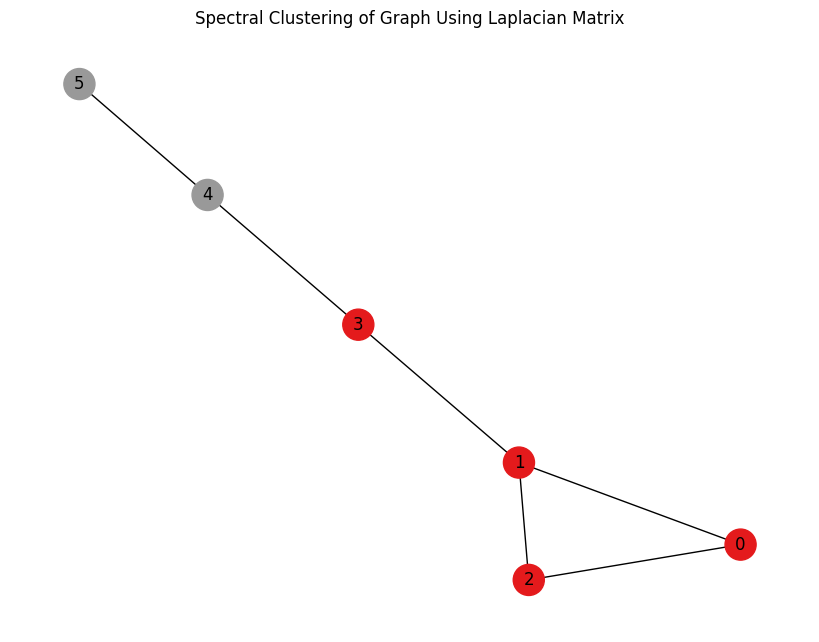

4.3 Example: Spectral Clustering on a Simple Graph

Let's consider a simple graph with 6 nodes. The adjacency matrix is given as:

We then compute the eigenvalues and eigenvectors of . The eigenvectors corresponding to the smallest non-zero eigenvalues can be used to partition the graph into clusters.

Visual Example: Here’s a visual representation of the clustering result.

Figure 1: Spectral Clustering of a Simple Graph Using the Laplacian Matrix.

5. Applications of Laplacian Matrices in Clustering

5.1 Image Segmentation

Laplacian matrices are widely used in image segmentation tasks, where an image is treated as a graph. Spectral clustering can be applied to segment the image into different regions based on pixel similarities.

5.2 Community Detection in Networks

In social network analysis, communities within networks are identified by partitioning the graph into subgraphs. Spectral clustering, using the Laplacian matrix, effectively detects these communities based on connectivity patterns.

5.3 Dimensionality Reduction

The eigenvectors of the Laplacian matrix can also be used for dimensionality reduction in clustering. By projecting data points onto a lower-dimensional space defined by these eigenvectors, clusters can become more distinct and easier to identify.

6. Challenges and Considerations

6.1 Choice of Similarity Measure

The construction of the similarity matrix significantly impacts the performance of spectral clustering. The choice of the similarity measure (e.g., Gaussian kernel, cosine similarity) and parameters (e.g., in the Gaussian kernel) should be carefully considered.

6.2 Computational Complexity

Computing the eigenvalues and eigenvectors of the Laplacian matrix can be computationally expensive, especially for large graphs. Efficient algorithms and approximations are necessary for handling large-scale datasets.

6.3 Number of Clusters

Determining the number of clusters is a common challenge in spectral clustering. Techniques such as the eigenvalue gap heuristic can be used, but they are not foolproof. Cross-validation and domain knowledge may also be required.

7. Conclusion

Laplacian matrices play a crucial role in graph partitioning and spectral clustering, providing a powerful tool for identifying clusters and community structures in complex datasets. Understanding the properties of Laplacian matrices and how they can be used to transform data for clustering is essential for applying these techniques effectively in unsupervised machine learning.

By leveraging the spectral properties of the Laplacian matrix, spectral clustering offers a flexible and robust approach to clustering, capable of handling non-convex clusters and complex data structures. However, careful consideration of similarity measures, computational resources, and the number of clusters is necessary to achieve optimal results.