Tensor Decompositions

Tensor decompositions are a powerful tool in data science and machine learning, particularly when dealing with high-dimensional data that cannot be adequately represented using matrices alone. Tensors generalize matrices to higher dimensions, and tensor decompositions allow for the extraction of latent factors in multi-way data, providing valuable insights and enabling advanced applications such as recommender systems, signal processing, and image compression.

1. Introduction to Tensors

1.1 What is a Tensor?

A tensor is a generalization of vectors and matrices to higher dimensions. While a vector is a one-dimensional array and a matrix is a two-dimensional array, a tensor can be an n-dimensional array, where n represents the tensor's order or mode. For example:

- A scalar is a tensor of order 0.

- A vector is a tensor of order 1.

- A matrix is a tensor of order 2.

- A three-way tensor (a cube of data) is a tensor of order 3.

Mathematically, a tensor can be represented as:

where are the dimensions of the tensor, and is the order of the tensor.

1.2 Notation and Terminology

In tensor algebra:

- Mode-n fibers: Analogous to rows and columns in matrices. For a tensor of order 3, mode-1 fibers are vectors obtained by fixing the second and third indices.

- Slices: 2D sections of a tensor obtained by fixing one index.

- Unfolding or matricization: The process of rearranging the elements of a tensor into a matrix.

2. Types of Tensor Decompositions

Tensor decompositions aim to break down a tensor into a sum of simpler components. There are several types of tensor decompositions, each useful for different applications.

2.1 Canonical Polyadic (CP) Decomposition

CP Decomposition is the most straightforward and widely used tensor decomposition. It expresses a tensor as a sum of rank-one tensors. For a tensor of order 3, the CP decomposition is:

where , , and are the factor matrices, and denotes the outer product. is the rank of the tensor.

Applications:

- Recommender systems: CP decomposition can be used to model the interactions between users, items, and contexts.

- Latent factor analysis: Extracting hidden structures in multi-dimensional data.

2.2 Tucker Decomposition

Tucker Decomposition is a more general form of tensor decomposition. It decomposes a tensor into a core tensor multiplied by a matrix along each mode. For a third-order tensor, the Tucker decomposition is:

where:

- is the core tensor.

- are the factor matrices.

- denotes the n-mode product.

Applications:

- Dimensionality reduction: Tucker decomposition can reduce the dimensions of data while preserving important features.

- Multilinear PCA: Tucker decomposition generalizes PCA to higher-order tensors.

2.3 Higher-Order Singular Value Decomposition (HOSVD)

HOSVD is an extension of the SVD to higher-order tensors. It generalizes the matrix SVD by decomposing a tensor into a core tensor and orthogonal matrices along each mode:

where is the core tensor, and are orthogonal matrices obtained from the SVD of the mode-n unfolding of the tensor.

Applications:

- Signal processing: HOSVD is used in multi-way signal analysis.

- Image compression: HOSVD can compress multi-dimensional image data efficiently.

2.4 Non-Negative Tensor Factorization (NTF)

NTF extends non-negative matrix factorization (NMF) to tensors. It decomposes a non-negative tensor into non-negative factors:

Applications:

- Topic modeling: NTF can be used to discover latent topics in text data.

- Bioinformatics: Analyzing multi-way biological data.

3. Practical Example of Tensor Decomposition

Let’s consider an example where we apply CP decomposition to a simple 3-way tensor representing user preferences for different items in different contexts.

3.1 Example Data

Assume we have a 3-way tensor representing users, items, and contexts. Each element of the tensor represents the preference score of user for item in context .

3.2 Applying CP Decomposition: Step-by-Step Calculation

Step 1: Define the Tensor

We start with a simple 3-way tensor of dimensions , where each element represents the preference score.

Step 2: Initialize Factor Matrices

For CP decomposition, we initialize the factor matrices randomly or using techniques such as SVD.

Step 3: Perform Alternating Least Squares (ALS)

Apply the ALS algorithm to iteratively update the factor matrices until convergence:

-

Update :

-

Update :

-

Update :

Step 4: Convergence Check

Check if the factor matrices have converged to stable values. If not, repeat the ALS updates.

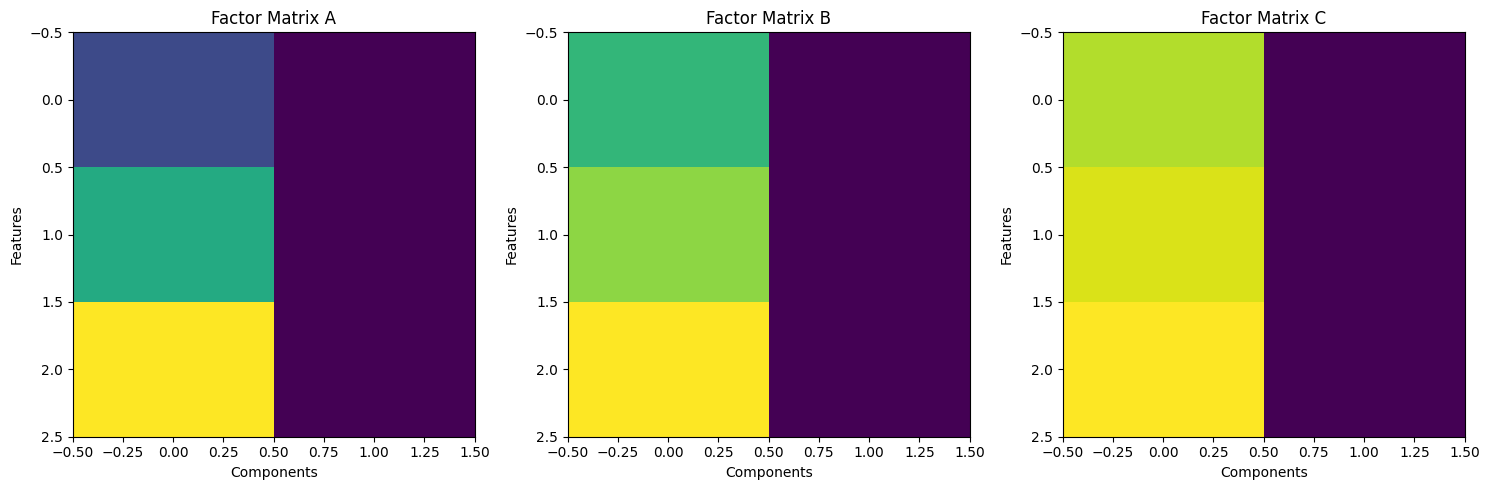

3.3 Visualization

After performing CP decomposition, the factor matrices can be visualized to understand the latent factors in the data.

Figure 1: Visualization of factor matrices from CP decomposition.

3.4 Example Calculation in NumPy

import numpy as np

# Step 1: Define the Tensor

T = np.array([

[[1, 2, 3], [4, 5, 6], [7, 8, 9]],

[[10, 11, 12], [13, 14, 15], [16, 17, 18]],

[[19, 20, 21], [22, 23, 24], [25, 26, 27]]

])

# Step 2: Initialize Random Factor Matrices

np.random.seed(42)

rank = 2

A = np.random.rand(T.shape[0], rank)

B = np.random.rand(T.shape[1], rank)

C = np.random.rand(T.shape[2], rank)

# Step 3: Alternating Least Squares (ALS) to Approximate CP Decomposition

def khatri_rao(matrices):

"""Compute the Khatri-Rao product (column-wise Kronecker product) of two matrices."""

A, B = matrices

return np.einsum('ik,jk->ijk', A, B).reshape(-1, A.shape[1])

def update_factor(T, A, B, C, mode):

"""Update a single factor matrix in CP decomposition."""

if mode == 0:

V = khatri_rao([C, B])

T_unfolded = T.reshape(T.shape[0], -1)

return np.linalg.lstsq(V, T_unfolded.T, rcond=None)[0].T

elif mode == 1:

V = khatri_rao([C, A])

T_unfolded = T.transpose(1, 0, 2).reshape(T.shape[1], -1)

return np.linalg.lstsq(V, T_unfolded.T, rcond=None)[0].T

elif mode == 2:

V = khatri_rao([B, A])

T_unfolded = T.transpose(2, 0, 1).reshape(T.shape[2], -1)

return np.linalg.lstsq(V, T_unfolded.T, rcond=None)[0].T

# Step 4: Iterate to Converge

for _ in range(50): # Iterate to converge

A = update_factor(T, A, B, C, 0)

B = update_factor(T, A, B, C, 1)

C = update_factor(T, A, B, C, 2)

# Display factor matrices

print("Factor matrix A:\n", A)

print("Factor matrix B:\n", B)

print("Factor matrix C:\n", C)

4. Applications of Tensor Decompositions

Tensor decompositions are widely used in various fields:

4.1 Recommender Systems

Tensor decompositions help in modeling multi-way interactions among users, items, and contexts, leading to better recommendations.

4.2 Signal Processing

In multi-way signal analysis, tensor decompositions are used to separate signals into their components, improving the analysis of complex data.

4.3 Computer Vision

Tensor decompositions are used in image compression and feature extraction, reducing the dimensionality of image data while preserving essential information.

4.4 Bioinformatics

In bioinformatics, tensor decompositions are applied to analyze multi-way biological data, such as gene expression data across different conditions and time points.

5. Conclusion

Tensor decompositions are a versatile and powerful tool for analyzing multi-dimensional data. By breaking down tensors into simpler components, they provide insights into the underlying structure of the data, enabling advanced applications in machine learning, signal processing, and more. Mastering tensor decompositions opens the door to more sophisticated data analysis techniques, particularly in areas where data complexity goes beyond what matrices can represent.